It's been a while, but I figured out the hubbub behind compute shaders!

In all honesty, since a compute shader takes in data through its own pipeline and writes out a stored image, it's essentially post-processing. It's interesting that a quad no longer has to be rendered separately, at least; that makes it a little more convenient. Having to create compute pipelines is heavily inconvenient, but it's all about the graphics processing, not necessarily readability.

Shown are a few simple effects, including edge sharpening and detection from previous projects and an embossing shader.

An account of pain, struggle, and amusing discoveries found in a man's quest for game programming style and finesse.

Sunday, October 9, 2016

Saturday, October 1, 2016

The Same Thing, Only Harder

Man, progress certainly slows to a crawl when you only work on the side on the weekend.

It's been a while, but Vulkan sure knows how to up the ante on 3D models. The basics are very similar to a 3D extrapolation of the 2D work I was already doing, and I used a .ktx model for the satellite.

The difference? Well, the triangle was originally just a set of points, so the key was automatically setting up VertexInputStates, buffers, and device memory, and storing information such as vertices and indices in contiguous memory structures. Definitely more than a triangle's worth!

And it's worth it; I'm also quite grateful to the creators of Assimp for making the mesh loading side so painless, even with model types like .ktx:

3D models covered! Now to see what else I can do.

Saturday, August 27, 2016

Hiatus

Apologies for the late (really late) reply; work has been in full swing and nothing is allowed to be bloggable.

What will this mean? Progress will be down to a crawl (couple of hours on the weekends; I gotta enjoy life as well) but will continue. Keep posted for any progress! I promise it will be visual.

Until then, I'll be working on a base application class for Vulkan.

Friday, August 19, 2016

Nearly There

Whew! Managed to construct all the basic components necessary for the example. Now I just need to create a base class for that example (and any others that may follow).

It's mind-boggling to see how much a low-level API really requires from people in terms of not just knowledge, but sheer self-structuring (and 3000+ lines of code). Seems like the input wasn't anything special though; the direction just looks like it's following basic keycodes.

Ah well, I liked using them anyways.

Thursday, August 18, 2016

UI Is Its Own Beast

Yeah, I forgot that. The big components for today were the mesh loading class (which uses Assimp, always a top open source choice) and the UI text overlay, which simply does things like update the FPS display. Thing is, it also requires its own set of buffers, descriptors (especially for the changing text), image objects, command buffers, the works. Seriously, the only thing we could really share with the rest of the application was the device, held as a reference.

Soon we shall be back to a main program of sorts at hand! I'll also see what secrets lie in input for this application as well (moving around). It may even be better than DirectInput!

Wednesday, August 17, 2016

The Parts Add Up

Construction continues! Mainly parts for a swap chain and debug tools. The example I'm following also supports linear tiling instead of using a staging buffer, but it's highly unlikely I'll be using it.

I'm also concerned about whether using C-style casts is a good idea for void*; I hear a lot that reinterpret_cast isn't a good idea, but I'm also hard-wired to prefer the new C++ casts.
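For what it's worth, going from void* back to a typed pointer is a job for static_cast, so neither a C-style cast nor reinterpret_cast is needed for that case. Here is a minimal sketch, assuming the void* is a block of mapped memory of the kind Vulkan hands back when mapping device memory. The Vertex layout and both function names are made up for illustration.

```cpp
#include <cassert>
#include <cstddef>
#include <cstring>
#include <vector>

// Hypothetical vertex layout, used only for illustration.
struct Vertex {
    float position[3];
    float color[3];
};

// Copies vertices into raw memory handed over as void*, the way mapped
// device memory usually arrives. std::memcpy takes void* directly, so
// the copy itself needs no cast at all.
inline void copyToMapped(void* mapped, const std::vector<Vertex>& vertices) {
    assert(mapped != nullptr);
    std::memcpy(mapped, vertices.data(), vertices.size() * sizeof(Vertex));
}

// Reads a vertex back. void* -> Vertex* is a static_cast; this is only
// valid when the memory really holds Vertex objects at that address.
inline const Vertex& vertexAt(const void* mapped, std::size_t index) {
    assert(mapped != nullptr);
    return static_cast<const Vertex*>(mapped)[index];
}
```

reinterpret_cast is still the right tool when converting between unrelated pointer types or between pointers and integers, which is where most of the warnings about it come from.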

And now I'm working on a texture loader class! I'm actually quite happy that such complex details as the image, image memory, and samplers can all be wrapped up in a single object. If only Vulkan followed a similar structure; the parts are all there, but they could stand to be wrapped up nicely.

Tuesday, August 16, 2016

Component by Component

One of the main reasons why I had to start over from the ground up instead of carefully finding out what the problem was (other than just not knowing how) was that I hadn't sliced the application (Vulkan) into enough components.

One of the main ones I focused on today was a buffer object to hold things such as the buffer, device memory, and a reference to the device in question. This was linked to the other object I was working on, an instance that holds both the physical and logical device instead of keeping them separate.

One of the more interesting and helpful methods I learned was to make up for the heavy code writing involved in setting up structs by creating initializing functions for them. The structs have no constructors of their own, so instead of writing

SomeStruct s = {};

s.a = 1;

s.b = 2;

s.c = 3;

...

or {1, 2, 3} every time, I can call one function that fills in the struct and returns it. The members got a bit tricky to keep track of, so a stand-in for the missing constructor comes in handy.
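An initializing function of that kind might look like the sketch below. To keep it self-contained it uses a stand-in struct rather than the real Vulkan headers, so BufferCreateInfo, the constant, and the function name are all hypothetical.

```cpp
#include <cstdint>

// Stand-in for a Vulkan-style create-info struct (hypothetical; the real
// ones come from the Vulkan headers and have no constructors).
struct BufferCreateInfo {
    std::uint32_t sType;
    const void*   pNext;
    std::uint32_t flags;
    std::uint64_t size;
    std::uint32_t usage;
};

// Placeholder tag value, assumed for this sketch only.
constexpr std::uint32_t kStructureTypeBufferCreateInfo = 12;

namespace initializers {

// Zeroes the whole struct, then fills in only the members the caller
// cares about, so nothing is left uninitialized by accident.
inline BufferCreateInfo bufferCreateInfo(std::uint32_t usage, std::uint64_t size) {
    BufferCreateInfo info = {};
    info.sType = kStructureTypeBufferCreateInfo;
    info.usage = usage;
    info.size = size;
    return info;
}

}  // namespace initializers
```

A call site then shrinks to a single line such as `BufferCreateInfo info = initializers::bufferCreateInfo(usage, size);`, and the member order no longer matters to the caller.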

This is still a fairly lengthy process, so I definitely won't get anything on the screen until I'm absolutely sure it works.

Monday, August 15, 2016

Failure Once More

Yeugh, that's what I get for following a tutorial that doesn't have an established project. I get all the way to model construction and nothing shows.

Turns out I'll have to start from square one, but by following some more proper examples that work. Who knows? Maybe it'll work out better this time. But right now, neither I nor the source code can explain why a blank screen shows instead of a model.

Sunday, August 14, 2016

Texturing in the Deep

Today I managed a couple more simple topics through the excessive complexity of Vulkan.

First off, texture mapping! An image is a bit different from a buffer, which required a different set of functions for creating, copying, and transferring image objects. I also had to create a sampler object and add it to the descriptor layout alongside the uniform buffer object to make sure it could be read by the shader. Luckily enough, the texture coordinates weren't too difficult to add to the shader. Voila!

I also added some quick linear filtering and texture addressing for repeating textures:

We can even quickly multiply a color! But this is simple enough once we get past setting up the proper image object, memory, and view:

But wait! What's this:

The left object is actually behind the right; this means I need to set up depth calculations, which includes testing for the correct depth-stencil format and setting up the proper depth image view along with the swap chain image views when presenting to the screen. That way, we can use the z-buffer to show the correct pixel at the correct depth:

We need some 3D models, though! Time to tackle some model loading.

A shout out to the stb library and the tinyobjloader library for shipping as single header files instead of requiring static library linking! It definitely makes things easier across multiple platforms and configurations.

Friday, August 12, 2016

In Space

Phew, the information is nearly overloading my brain with how much Vulkan requires to even get a proper world-view-projection matrix set up in the uniform buffer. The uniform buffer follows a similar setup to the vertex and index buffers, but a resource descriptor has to be created for data that updates frame by frame. This also requires an in-depth setup of the pool from which the descriptor set is allocated. Yikes!

However, this means that we can now present an object moving in space! I also found a tip to use std::chrono as an easy source of frame time for time-based movement. Though a flipping square doesn't need too much adherence to time-based movement, it is a helpful method:
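The std::chrono tip boils down to something like this minimal sketch. The FrameTimer class and advanceAngle function are names I made up for illustration; only the std::chrono calls are standard.

```cpp
#include <chrono>

// Measures the time between consecutive frames using a monotonic clock.
class FrameTimer {
public:
    using Clock = std::chrono::steady_clock;

    FrameTimer() : last_(Clock::now()) {}

    // Returns the seconds elapsed since the previous call (or since
    // construction, on the first call).
    float tick() {
        const Clock::time_point now = Clock::now();
        const std::chrono::duration<float> delta = now - last_;
        last_ = now;
        return delta.count();
    }

private:
    Clock::time_point last_;
};

// Advances a rotation by a speed given in degrees per second, so the
// motion looks the same regardless of frame rate.
inline float advanceAngle(float angleDegrees, float degreesPerSecond, float deltaSeconds) {
    return angleDegrees + degreesPerSecond * deltaSeconds;
}
```

Calling `tick()` once per frame and feeding the result into `advanceAngle` gives the square the same rotation speed at 30 FPS as at 300.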

As for actual textures, watch out! I'm now working on an image object. The image object works much like a buffer, but has slightly different bindings and setups. I will keep using the stb library they recommended; it works very well and only requires a header file (instead of that static-linked SOIL).

Thursday, August 11, 2016

Wait, a Square

Such wonders a buffer can hold! Today was mainly spent working with buffers; these objects are similar to the DX11 buffers, except we can fill a host-visible staging buffer and then transfer its contents to a longer-lived buffer in high-performance device memory. In this way, I can change input data without having to redo the whole shader and compilation process:

However! Not all is set for buffers; the index buffer is also available in a similar format, which works very much like indexed drawing in DX11:

What's next? Resource descriptors! They are required to set up free access to resources such as buffers and images during drawing. What buffer? A uniform buffer, which means world/view/projection fun times!

Wednesday, August 10, 2016

Actual Imagery

Phew! After all these days of setting up the framework, I finally got a thing going on the screen!

It's also resizable, which means I had to properly set up the GLFW callback and recreate the swap chain (along with all other things connected to it) after the swap chain images were invalidated by a resize.

After creating the command buffers, I also had to create synchronization objects. With Vulkan, we can use either fences or semaphores, so I used one semaphore for image availability and another for render completion. This also requires waiting whenever anything in the system (like the swap chain) has to be changed while the program is running.

And with that, the image is finally presented to the screen! Let's try actual input data this time.

Tuesday, August 9, 2016

Piping that Line

Phew, the extra components required to set up graphics programming never end! I'm getting much more in depth with the process of drawing a triangle than I ever felt possible!

I pulled in the necessary components for fixed functions (input assembly, rasterization, color blending) and also had to make a render pass that would inform Vulkan about the framebuffers it would be attached to.

Then I had to make the framebuffers! And the whole pipeline object. Everything's static for now, as an array of dynamic states would be necessary to tell the pipeline what can change over time. Otherwise I'd have to recreate the pipeline.

And now onto command pools and command buffers! Because an API known for multithreaded wonder has to set up the render commands with a specific pool of multithreadable memory somehow.

Monday, August 8, 2016

Rising From the Ashes

Wowza, I totally forgot that because I was using educational software, they had to RIP it away from me in a computer wipe. I did manage to come back, though! I keep most of my big projects intact so they may live on through my website, and I did a similar gesture for my current Vulkan work.

As for my current Vulkan work? Since today was a lot of installations, I mainly had time to set up image views (which were also encapsulated in RAII objects, but I totally forgot) and start working on the shader pipeline.

Very interesting tidbit: Vulkan programs read shaders in as bytecode. The actual compilation of the shaders into .spv bytecode (SPIR-V's the name) is done separately from the application code. This ensures much quicker loading than kid-friendly GLSL/HLSL source. However, I can still write GLSL shaders as long as they are compiled into SPIR-V format.
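Since the shader arrives as a binary blob, a quick sanity check before handing it to Vulkan can catch a wrong or truncated file. Below is a minimal sketch; the function names are my own, and it assumes the file was written with the same byte order as the machine reading it. The one hard fact it relies on is that a SPIR-V module is a stream of 32-bit words starting with the magic number 0x07230203.

```cpp
#include <cstddef>
#include <cstdint>
#include <cstring>
#include <fstream>
#include <string>
#include <vector>

// First word of every SPIR-V module.
constexpr std::uint32_t kSpirvMagic = 0x07230203u;

// Reads a whole file into memory; returns an empty vector on failure.
inline std::vector<char> readBinaryFile(const std::string& path) {
    std::ifstream file(path, std::ios::binary | std::ios::ate);
    if (!file) {
        return {};
    }
    const std::streamsize size = file.tellg();
    std::vector<char> bytes(static_cast<std::size_t>(size));
    file.seekg(0);
    file.read(bytes.data(), size);
    return bytes;
}

// Checks that the blob is a whole number of 32-bit words and starts with
// the SPIR-V magic number (in host byte order, an assumption of this sketch).
inline bool looksLikeSpirv(const std::vector<char>& bytes) {
    if (bytes.size() < sizeof(std::uint32_t) ||
        bytes.size() % sizeof(std::uint32_t) != 0) {
        return false;
    }
    std::uint32_t firstWord = 0;
    std::memcpy(&firstWord, bytes.data(), sizeof(firstWord));
    return firstWord == kSpirvMagic;
}
```

Passing the check does not prove the module is valid, only that the file is plausibly compiled output rather than, say, the GLSL source itself.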

According to this pipeline, I can fully program vertex/geometry/fragment shaders and tessellation, and also control quite a bit of the color blending/rasterization processes.

Sunday, August 7, 2016

Devil in the Details

The itty bitty and the nitty gritty keep going at me in Vulkan. No end in sight just yet. Apart from making the mistakes of writing callback functions as class member functions and trying to use native GLFW code, I'm making a bit of progress.

The main big thing that I've learned is that Vulkan treats GPUs (and other such objects) as physical devices. How to interact with them? Interfaces called logical devices.

What of the window interaction? There's a window surface, but don't forget the various queues for graphics and presentation, and the swap chain for images. All these require intimate and in-depth structure-based creation, with possible iteration to find either the first candidate that works or the best one. When picking a physical device, for instance, one can look for things such as geometry shader support (essential) or a discrete GPU (optional, but highly preferred).
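The "find the best one" iteration can be sketched as a simple scoring pass. To stay self-contained, this uses a made-up GpuInfo struct in place of the properties and features Vulkan reports for each physical device; the struct, the function names, and the score values are all assumptions for illustration.

```cpp
#include <cstddef>
#include <string>
#include <vector>

// Hypothetical summary of what was queried from one physical device.
struct GpuInfo {
    std::string name;
    bool isDiscrete;
    bool hasGeometryShader;
};

// Scores a device: 0 means unusable. Geometry shader support is treated
// as essential, a discrete GPU as a strong preference.
inline int rateDevice(const GpuInfo& gpu) {
    if (!gpu.hasGeometryShader) {
        return 0;
    }
    int score = 1;
    if (gpu.isDiscrete) {
        score += 1000;
    }
    return score;
}

// Returns the index of the highest-scoring usable device, or -1 if none
// of the candidates qualifies.
inline int pickBestDevice(const std::vector<GpuInfo>& gpus) {
    int bestIndex = -1;
    int bestScore = 0;
    for (std::size_t i = 0; i < gpus.size(); ++i) {
        const int score = rateDevice(gpus[i]);
        if (score > bestScore) {
            bestScore = score;
            bestIndex = static_cast<int>(i);
        }
    }
    return bestIndex;
}
```

Taking the first device with a non-zero score would be the "one that can" variant; keeping the maximum is the "best one" variant.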

As for that triangle? Welp, this is Vulkan we're talking about. We've still got views for our images on the swap chain AND creating our pipeline from the ground up. This is gonna be a while.

Saturday, August 6, 2016

Vulkan's Hammer

Vulkan is a beast; that's all I can say.

I'm currently working on setting up the development environment and making sure everything's linked the way it should be. This means using libraries like GLFW and GLM to build around the Vulkan code. The Vulkan code is right now starting out with creating things such as instances and devices.

This means tricky memory management! However, I built a templated class to help differentiate between destroying and cleaning up various objects' (VkInstance, VkDevice) memory upon destruction of the object.
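A templated wrapper along those lines could look like the sketch below. It is not the class from my project, just a minimal illustration of the idea: the handle type is a template parameter, and the matching destroy call is passed in as a callable. ScopedHandle is a made-up name.

```cpp
#include <functional>
#include <utility>

// Owns one handle and runs the supplied cleanup exactly once, either on
// reset() or when the wrapper goes out of scope.
template <typename Handle>
class ScopedHandle {
public:
    ScopedHandle(Handle handle, std::function<void(Handle)> deleter)
        : handle_(handle), deleter_(std::move(deleter)) {}

    ~ScopedHandle() { reset(); }

    // Copying would lead to a double destroy, so it is disabled.
    ScopedHandle(const ScopedHandle&) = delete;
    ScopedHandle& operator=(const ScopedHandle&) = delete;

    Handle get() const { return handle_; }

    // Destroys the handle now; later calls do nothing.
    void reset() {
        if (deleter_) {
            deleter_(handle_);
            deleter_ = nullptr;
        }
    }

private:
    Handle handle_;
    std::function<void(Handle)> deleter_;
};
```

With real Vulkan types the deleter would be a small lambda wrapping the matching destroy function for that handle, which is where the "differentiate between destroying and cleaning up" part comes in.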

What's next? Validation layers and debug callbacks! We'll probably turn them off when doing the actual rendering in order to make it quick, which is a good thing! Bad thing is that we have to build it all from the ground up. Ugh.

Thursday, August 4, 2016

Lurking from the Shadows

Finally! I figured out what the shadow blob needed in order to show up. Originally we wanted to reduce shadowing in the project, but there was no way to completely reduce shadows unless we got rid of them altogether. Enter the shadow blob, a quick way to vary a texture placed on a quad on a surface. Thing is, I had no idea the example used world position, not relative position. This meant the blob would only be at the height of 0 in world space each time. Now we have...a shadow blob!

As for extra project work, the memory manager's taken a back seat, as it really doesn't need too much else. What else to work with? Why not Vulkan? It's a strange and powerful API, so it might do me well to at least learn the basics.

Wednesday, August 3, 2016

Unexpected Brainfry

Yeuch, my brain is awash. I fixed up a massive crash bug in our capstone project again (seems as if the bridge manager was casting without checking for a successful cast) and fixed a variety of little things: missing walls, precarious perches, and misplaced menu options.

As for the memory manager, I attempted to place it inside either a dynamic library or a static library; unfortunately, that set of classes is not usable through dynamic linking (static helper functions only) and the attempt to move the code to a static library was...ugly. Missing assemblies and god knows what else. I managed to put it in just an example project, so I'll stick with getting the memory to work before expanding it into something linkable to other projects.

I nearly lost hope, but realized that I forgot to cast the pointer properly when subtracting the header size from it. I should definitely not hold these coding sessions late in the evening.
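The pointer cast matters because pointer arithmetic moves in units of the pointed-to type: subtracting sizeof(Header) from a typed pointer steps back that many elements, not that many bytes. A minimal sketch of the byte-wise version, with a made-up AllocationHeader and function names that are not from my actual manager:

```cpp
#include <cassert>
#include <cstddef>
#include <new>

// Hypothetical bookkeeping stored directly in front of each allocation.
struct AllocationHeader {
    std::size_t size;
};

// Writes a header at the start of the block and returns the address
// just past it, which is what the caller gets to use.
inline void* allocateFrom(void* block, std::size_t payloadSize) {
    assert(block != nullptr);
    AllocationHeader* header = new (block) AllocationHeader{payloadSize};
    return reinterpret_cast<unsigned char*>(header) + sizeof(AllocationHeader);
}

// Recovers the header from a user pointer. The pointer is first cast to
// a byte pointer so the subtraction moves by bytes.
inline AllocationHeader* headerFromUserPointer(void* userPointer) {
    assert(userPointer != nullptr);
    unsigned char* bytes = static_cast<unsigned char*>(userPointer);
    return reinterpret_cast<AllocationHeader*>(bytes - sizeof(AllocationHeader));
}
```

The sketch assumes the block passed in is suitably aligned for the header and large enough to hold both the header and the payload.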

Brain, rest!

Tuesday, August 2, 2016

Terrain Release

Due to today being packed with so many final presentations, I didn't get much work done.

For allocators, I created two more - a fixed-size, fixed-alignment allocator for fast and uniform allocations/deallocations, and a proxy allocator. What does a proxy allocator do? It essentially holds another allocator and provides the option to store debug information if necessary.
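To give an idea of the two, here is a minimal sketch rather than my actual implementation. The pool hands out equally sized blocks from one buffer and threads its free list through the unused blocks; the proxy forwards to the pool and keeps a live-allocation count as its debug information. Both class names and the choice to track only a counter are assumptions for illustration.

```cpp
#include <cassert>
#include <cstddef>
#include <cstring>
#include <vector>

// Fixed-size block allocator. Each free block stores the address of the
// next free block in its first bytes.
class PoolAllocator {
public:
    PoolAllocator(std::size_t blockSize, std::size_t blockCount)
        : blockSize_(roundUp(blockSize, alignof(std::max_align_t))),
          storage_(blockSize_ * blockCount) {
        assert(blockSize > 0);
        for (std::size_t i = 0; i < blockCount; ++i) {
            deallocate(storage_.data() + i * blockSize_);
        }
    }

    PoolAllocator(const PoolAllocator&) = delete;
    PoolAllocator& operator=(const PoolAllocator&) = delete;

    // Pops the head of the free list; returns nullptr when exhausted.
    void* allocate() {
        if (head_ == nullptr) {
            return nullptr;
        }
        void* block = head_;
        std::memcpy(&head_, block, sizeof(head_));
        return block;
    }

    // Pushes the block back onto the free list.
    void deallocate(void* block) {
        std::memcpy(block, &head_, sizeof(head_));
        head_ = block;
    }

private:
    static std::size_t roundUp(std::size_t value, std::size_t multiple) {
        return (value + multiple - 1) / multiple * multiple;
    }

    std::size_t blockSize_;
    std::vector<unsigned char> storage_;
    void* head_ = nullptr;
};

// Wraps another allocator and records how many allocations are live.
class ProxyAllocator {
public:
    explicit ProxyAllocator(PoolAllocator& inner) : inner_(inner) {}

    void* allocate() {
        void* block = inner_.allocate();
        if (block != nullptr) {
            ++live_;
        }
        return block;
    }

    void deallocate(void* block) {
        inner_.deallocate(block);
        --live_;
    }

    std::size_t liveAllocations() const { return live_; }

private:
    PoolAllocator& inner_;
    std::size_t live_ = 0;
};
```

Both allocate and deallocate are constant time, which is the appeal of the fixed-size design; a non-zero live count at shutdown is a cheap leak detector.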

As for the terrain, it's finally released online! Check out my website (here) and go to my Portfolio link to download the full source.

A few more hours and this presentation extravaganza will be over...

Monday, August 1, 2016

Free the List

And yet, I am not allowed to stop capstone work just yet. There was an instance where the elevator did not hit the ground (conflicting geometry) and the insect used attacks that were de-programmed.

That said, we fixed it and the build is again ready to go!

As for memory manager work, I fixed up a stack allocator and the free list (linked list) allocator; hopefully best-fit will work better than first-fit for free-block finding!
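The difference between the two search strategies fits in a few lines. This is a minimal sketch over a made-up FreeBlock node, not the allocator's real bookkeeping: first-fit stops at the first block that is large enough, while best-fit walks the whole list and keeps the smallest block that still fits.

```cpp
#include <cstddef>

// Hypothetical free-list node: the size of the free region and a link
// to the next free region.
struct FreeBlock {
    std::size_t size;
    FreeBlock*  next;
};

// Returns the first block that can hold `size` bytes, or nullptr.
inline FreeBlock* findFirstFit(FreeBlock* head, std::size_t size) {
    for (FreeBlock* block = head; block != nullptr; block = block->next) {
        if (block->size >= size) {
            return block;
        }
    }
    return nullptr;
}

// Returns the smallest block that can hold `size` bytes, or nullptr.
inline FreeBlock* findBestFit(FreeBlock* head, std::size_t size) {
    FreeBlock* best = nullptr;
    for (FreeBlock* block = head; block != nullptr; block = block->next) {
        if (block->size >= size && (best == nullptr || block->size < best->size)) {
            best = block;
        }
    }
    return best;
}
```

Best-fit tends to leave less wasted space per allocation at the cost of always scanning the full list, so which one "works better" depends on the allocation pattern.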

Sunday, July 31, 2016

Allocating the Right Way

When picking out a good set of code examples to model a custom memory manager after, one must consider what the objectives actually are.

For example, what use would a custom memory manager be if it still goes through new/delete and the OS malloc, when avoiding those is the whole purpose? I almost fell into that one; the example I came across still called new and delete on char* groups to model bytes.

Instead, I found a much better example that suggests using a customized malloc (just grabbing the data instead of using OS malloc) then applying a placement new using a templated new/delete (array included). So far I'm liking this method!
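The placement new part can be sketched as a pair of small templates. The names constructAt and destroyAt are my own, and the memory is assumed to come from the custom allocator and to be large and aligned enough for T.

```cpp
#include <cassert>
#include <new>
#include <utility>

// Constructs a T in memory that was obtained elsewhere (for example from
// a custom allocator). No heap allocation happens here.
template <typename T, typename... Args>
T* constructAt(void* memory, Args&&... args) {
    assert(memory != nullptr);
    return new (memory) T(std::forward<Args>(args)...);
}

// Runs the destructor without releasing the memory; handing the bytes
// back to the allocator is a separate step.
template <typename T>
void destroyAt(T* object) {
    if (object != nullptr) {
        object->~T();
    }
}
```

Splitting construction from allocation this way is what lets the manager reuse one big block for many objects without ever touching the OS allocator again.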

I'm also learning about byte alignment; apparently if one masks off the log2(n) least significant bits from an address, one can get the n-byte aligned address from that address. Neat!
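As a minimal sketch of that masking trick, assuming n is a power of two (the function names are mine): clearing the low log2(n) bits rounds an address down to the previous n-byte boundary, and adding n - 1 first rounds it up to the next one, which is usually what an allocator wants.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>

inline bool isPowerOfTwo(std::size_t n) {
    return n != 0 && (n & (n - 1)) == 0;
}

// Rounds an address down to the nearest multiple of `alignment` by
// masking off the low bits. Requires a power-of-two alignment.
inline std::uintptr_t alignDown(std::uintptr_t address, std::size_t alignment) {
    assert(isPowerOfTwo(alignment));
    const std::uintptr_t mask = static_cast<std::uintptr_t>(alignment) - 1;
    return address & ~mask;
}

// Rounds an address up to the nearest multiple of `alignment`; an
// address that is already aligned is returned unchanged.
inline std::uintptr_t alignUp(std::uintptr_t address, std::size_t alignment) {
    assert(isPowerOfTwo(alignment));
    const std::uintptr_t mask = static_cast<std::uintptr_t>(alignment) - 1;
    return (address + mask) & ~mask;
}
```

For a 16-byte alignment the mask is 0xF, so the four least significant bits (log2(16) = 4) are the ones being cleared.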

I'm also thinking of dabbling in a variety of allocators; that way I can use the one that best fits what I'm looking for.

Saturday, July 30, 2016

Just Done

Today and yesterday marked a nearly endless amount of time spent in capstone. It is done now; I may rest.

All subtitles and credits added, all missing meshes and doors fixed.

Sure, there's more to be done, but in an academic sense, I am done.

...What to do? Start up a custom memory manager!

Thursday, July 28, 2016

Day 73: Nothing Special

Well! Tomorrow's going to be the final day for official capstone work. Oddly enough, all sorts of fixes needed to be put in along the way. Rocks were off or not fully connected, crystals were back-face culled, and the cube generator (falling blocks in the Time area) wasn't using the original material. Heck, the cube generator needed some fixing since it wasn't using the original dissolve material well as is.

Unfortunately I did run into some walls, as said dissolving material doesn't apply to materials involving the character. Ah well.

Due to the final exam today, terrain work may be concluded, settling for next week's presentation. I'll have to get working on something else soon...

Wednesday, July 27, 2016

Day 72: Dreamy

Phew, through all this mesh fixing and level loading and studying, I've been fairly busy. I did manage a shader that adds on extra samples for a dreamy fade-out:

I am reaching the limit, at least for quick and cheap post-processing shaders that don't require anything other than a single render target switch.

As for studying, I made sure to run through AI pathfinding techniques, perfect forwarding, memory management, saving disk space and loading, and the basic graphics techniques I've implemented in my project. I'm ready for tomorrow!

Tuesday, July 26, 2016

Day 71: Ew, Studying

I've finally hit the point in capstone where I will no longer be adding new features! Woo!

I will be fixing things, especially the aftermath of instancing. On the bright side, it allows me to remesh some areas that I did not consider; previous passes of these levels involved 30+ rocks for a single ceiling (!!!) instead of one or more ceiling tiles, in the case of an aperture.

I also managed to solve that pesky problem (eh) of people seeing through the character with the camera; when the camera gets too close, she turns invisible! It required an extra line trace instead of simple distance calculation due to the span of her capsule.

As for terrain, I will be adding new effects when I can; however, a large exam is approaching and I'm preparing the presentation of said terrain. So many things to cover! Hopefully I can give a simple enough explanation of Simplex Noise.

Monday, July 25, 2016

Day 70: A Variety of Pretty Things

Seems like instancing meshes led to some strange complications. Some of the meshes became transparent, oh no! Or back-face culled. I just replaced each one with a working mesh and it worked fine; it also allowed me to reduce the number of meshes in the scene, using a single rock where 15 or so used to fill up a small hole.

As for the terrain, post-processing filters are in the works!

Pixelation allows people to sample colors divided across an image, much like point filtering:
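For the curious, here's a rough C++ sketch of the pixelation idea. The real thing lives in a pixel shader; `Float2`, `PixelateUV`, and the `blocks` parameter are names I made up for illustration. The trick is just snapping each texture coordinate to the corner of its block before sampling:

```cpp
#include <cassert>
#include <cmath>

// Hypothetical stand-in for a 2D texture coordinate in [0, 1].
struct Float2 { float x, y; };

// Snap a texture coordinate to the corner of the block it falls in.
// `blocks` is the number of blocks across each axis (an assumed parameter).
inline Float2 PixelateUV(Float2 uv, float blocks)
{
    assert(blocks > 0.0f);
    return { std::floor(uv.x * blocks) / blocks,
             std::floor(uv.y * blocks) / blocks };
}
```

Every coordinate inside the same block ends up sampling the same texel, which is where the chunky look comes from.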

We can also split the image into color tones for a posterized effect:
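A minimal sketch of posterizing, assuming each color channel is a float in [0, 1] and that the number of tones (`levels`) is a tweakable value; `PosterizeChannel` is a hypothetical name, not the shader's actual function:

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

// Quantize one color channel in [0, 1] down to `levels` evenly spaced tones.
inline float PosterizeChannel(float c, int levels)
{
    assert(levels >= 2);
    const float n = static_cast<float>(levels);
    // floor(c * n) picks the band; the min keeps c == 1.0 inside the top band.
    const float band = std::min(std::floor(c * n), n - 1.0f);
    // Spread the bands back over the full [0, 1] range.
    return band / (n - 1.0f);
}
```

Running this on each of the red, green, and blue channels gives the banded look.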

By also reducing the colors and crosshatching where luminosity is darkest, we get a strange but cool cross-hatching:

By experimenting with cross sampling using kernel matrices, we can also use edge detection and even blurring with small enough values:
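Here's roughly what the kernel sampling looks like on the CPU, as a sketch over a grayscale image stored in a `std::vector<float>`. The two kernels are the usual textbook ones (a Laplacian-style edge detector and a box blur), not necessarily the exact values I used, and `Convolve3x3` is a made-up helper name:

```cpp
#include <algorithm>
#include <array>
#include <cassert>
#include <cstddef>
#include <vector>

using Kernel3x3 = std::array<float, 9>;

// Textbook kernels (assumed values for illustration).
const Kernel3x3 kEdgeDetect = {{  0.0f, -1.0f,  0.0f,
                                 -1.0f,  4.0f, -1.0f,
                                  0.0f, -1.0f,  0.0f }};
const float kNinth = 1.0f / 9.0f;
const Kernel3x3 kBoxBlur = {{ kNinth, kNinth, kNinth,
                              kNinth, kNinth, kNinth,
                              kNinth, kNinth, kNinth }};

// Weighted sum of the 3x3 neighbourhood around (x, y).
// Samples outside the image are clamped to the nearest edge texel.
inline float Convolve3x3(const std::vector<float>& img, int width, int height,
                         int x, int y, const Kernel3x3& k)
{
    assert(width > 0 && height > 0);
    assert(img.size() == static_cast<std::size_t>(width) * static_cast<std::size_t>(height));
    float sum = 0.0f;
    for (int dy = -1; dy <= 1; ++dy) {
        for (int dx = -1; dx <= 1; ++dx) {
            const int sx = std::max(0, std::min(x + dx, width - 1));
            const int sy = std::max(0, std::min(y + dy, height - 1));
            sum += img[sy * width + sx] * k[(dy + 1) * 3 + (dx + 1)];
        }
    }
    return sum;
}
```

Flat regions come out as zero under the edge kernel, while anything next to a brightness change gets a non-zero response.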

I sure do like post-processing!

Sunday, July 24, 2016

Day 69: Swirly

Aha! So it is possible to switch between shaders on the fly! After redoing a bit of code to allow pixel shader re-compilation, I managed to be able to switch between shaders by the hit of a key.

As for more shader research, I managed to figure out a neat swirling effect. If one takes the percentage of how far inside a set radius the texture coordinate sits, turns that into an angle, and computes a new texture coordinate from the dot products of the coordinate with the sine and cosine of that angle (in other words, a rotation), one gets a swirling effect:
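A rough C++ sketch of that swirl math, assuming the swirl is centred on a point and that the angle falls off linearly toward the edge of the radius. `SwirlUV`, `center`, `radius`, and `maxAngle` are all names I picked for illustration:

```cpp
#include <cassert>
#include <cmath>

// Hypothetical stand-in for a 2D texture coordinate.
struct Float2 { float x, y; };

// Rotate `uv` around `center` by an angle that is strongest at the centre
// and fades to zero at `radius`. Coordinates outside the radius are untouched.
inline Float2 SwirlUV(Float2 uv, Float2 center, float radius, float maxAngle)
{
    assert(radius > 0.0f);
    const float dx = uv.x - center.x;
    const float dy = uv.y - center.y;
    const float dist = std::sqrt(dx * dx + dy * dy);
    if (dist >= radius) {
        return uv;
    }
    // How far inside the radius we are, as a 0..1 percentage.
    const float percent = (radius - dist) / radius;
    const float angle = percent * maxAngle;
    const float s = std::sin(angle);
    const float c = std::cos(angle);
    // Standard 2D rotation: each output component is a dot product of the
    // offset with (cos, -sin) or (sin, cos).
    return { center.x + (dx * c - dy * s),
             center.y + (dx * s + dy * c) };
}
```

Because it is a pure rotation, every coordinate keeps its distance from the centre; only the direction changes, and it changes more the closer you get to the middle.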

The tricky part about this shader research is figuring out what takes no real parameters in the calculation; it would be tricky to add other textures and parameters without using them in the other shader functions.

Saturday, July 23, 2016

Day 68: For Instance

Phew! Looks as if I found a stroke of luck with Unreal plugins. Thanks to the use of an Instanced Static Mesh editor, I can take the myriad of different meshes in the scene and transform them into a single instanced mesh. I expect some frame rate improvements, but not by much.

As for the terrain application, I managed to get a few more effects from post-processing.

First off, managing brightness by way of a simple multiplier:

Why did the text get dark? I learned that UI does not apply to post-processing the scene, so I placed the rendering of the UI after the post-processing quad.

Second, some color shifting, including a grayscale smudge and a more vibrant shifting effect:

What's next? Definitely more post-processing, though I should find a way to swap out shaders to make the application more data-driven.

Friday, July 22, 2016

Day 67: Solutions Galore

Today wasn't too much of a day for capstone work due to meetings and playtests, but we've come across an answer to one of our big issues! Seems as if the cinematic bridge formation near the end of our game wasn't forming because it was in the middle of creating an old bridge from a leftover piece and making a starting bridge point. Tomorrow we fix the big problem!

As for the terrain project, I finally got rendering to texture to work. Oddly enough, using the screen width and height for the quad zoomed it in so much that it blurred beyond distinction:

Once the width/height was set down to 2 (?!?), the quad appeared appropriately and allowed me to calculate a simple dot product for some grayscaling:
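The grayscale dot product is tiny, so here's a sketch of it in C++. I'm assuming the common luminance weights (0.299, 0.587, 0.114) here; the shader's exact weights may differ, and `Float3`, `Dot`, and `Grayscale` are illustrative names:

```cpp
#include <cassert>

// Hypothetical stand-in for an RGB color.
struct Float3 { float r, g, b; };

inline float Dot(Float3 a, Float3 b)
{
    return a.r * b.r + a.g * b.g + a.b * b.b;
}

// Collapse a color to a single brightness value and write it to all channels.
inline Float3 Grayscale(Float3 color)
{
    // Negative channels would point to a bug further up the pipeline.
    assert(color.r >= 0.0f && color.g >= 0.0f && color.b >= 0.0f);
    const Float3 weights = {0.299f, 0.587f, 0.114f};  // assumed luminance weights
    const float luminance = Dot(color, weights);
    return {luminance, luminance, luminance};
}
```

Green carries the most weight because the eye is most sensitive to it, so a pure green pixel comes out noticeably brighter than a pure blue one.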

Now we get to some interesting effects!

Thursday, July 21, 2016

Day 66: Crushing Failure

Today didn't work out so well for the water texture. What I thought would be a simple addition turned into a myriad set of complex steps that led me astray. I'll be pulling back a bit and focusing directly on render-to-texture in DirectX; hopefully I can get some post-processing effects up and running.

As for capstone, the new bridge effect is in! I had to tweak some things such as which specific world coordinate (Y, not X) to pan, but it all works well now. I also had to increase the speed of panning, which I did by multiplying the time value and the texture for panning by a set number for speed. Changing the period of the time input changed how long it would take the function to repeat, but this would only interrupt the pan at small periods. With the new multiplication, one can quickly pan the function across independently of the period.

Wednesday, July 20, 2016

Day 65: Rabbit Watering Hole

Phew, this water code is definitely more involved than a small add-in. Seems as if there has to be a separate shader created for reflection/refractions when rendering to texture, which takes into account a clipping plane.

Other than that busy work, capstone was mainly integration of things such as a totem pole for large state change cube pillars (taking the cube, making it invisible, and making a stand-in mesh around the pieces) and matinee skipping that requires a held input. It required some level blueprinting, but I got a similar thing to work for the cinematic.

Man, busy-work can definitely be unrewarding in terms of visuals. Here's a totem pole for your thoughts!

Tuesday, July 19, 2016

Day 64: Refractory

Phew, today's taken a lot out of me. I'm not sure, but I'm quite tired from the day. Regardless, I managed some more fixes, including segments where cinematic bridges wouldn't form (not setting a self destruct timer instead of setting the time to zero) and properly setting the fullscreen on the build to not become windowed (resolution setting function instead of fullscreen toggle).

As for the water, I'm managing. I created the render texture class to capture a render target, but the setup along with the water shading and texture is going to take a while.

Monday, July 18, 2016

Day 63: Cinematory

Today was another big build day, but I did manage to add some more content! This came in the form of cinematics; I took an animation sequence (think of a motion graphic) and added it to the game in the form of cinematic levels. Some things got tricky, like setting specularity to zero on textures since the default wasn't zero and making sure the full-screen command was only called once, but it managed to go through well:

As for terrain work, I've decided to tackle water for something big enough to wrap it up. Hopefully just calculating refraction won't be too expensive!

Sunday, July 17, 2016

Day 62: Jumpin' Jumpin'

What does one do with the terrain project when there's nothing better to do? Jump!

I also managed some more optimizations; since I realized a lot of the data in some of the constant buffers is constant across all applications, I could essentially save the cost of mapping/unmapping data buffers with data every frame in the Skydome and Terrain values.

But honestly, what is left to do? Water? Water perhaps!

I also worked on some design fixes and preventing crashes in the capstone project, namely that the Warp room had some very strange crashing based on dissolving cubes in and out of view. I managed to stick respawning and dissolving properly based on volumes and making sure to destroy objects and remove them if they were really dissolving; that was something that the original component did not have.

Friday, July 15, 2016

Day 61: Fifteen Builds

Phew! Today was a busy day in preparing the builds for tomorrow's playtest. Turns out a whole lot of things like looping audio, flipping platforms, and glass that wasn't orange enough were fixed just in time.

As for the terrain, I managed some loading files for sky and texture data. Oddly enough, std::getline was a choice over fstream, but I had to be very careful not to leave any space from the colon to the texture file.
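To show why the space after the colon matters, here's a minimal sketch of a `Key:Value` loader built on `std::getline`. The file format, the key names, and the helpers `ParseSettings` and `GetSetting` are assumptions for illustration, not the project's actual loader:

```cpp
#include <cassert>
#include <cstddef>
#include <istream>
#include <map>
#include <sstream>
#include <string>

using Settings = std::map<std::string, std::string>;

// Read "Key:Value" lines. Everything after the first colon is taken verbatim,
// so "Key: value" yields a value with a leading space.
inline Settings ParseSettings(std::istream& in)
{
    Settings settings;
    std::string line;
    while (std::getline(in, line)) {
        const std::size_t colon = line.find(':');
        if (colon == std::string::npos) {
            continue;  // not a setting line; skip it
        }
        settings[line.substr(0, colon)] = line.substr(colon + 1);
    }
    return settings;
}

// Convenience overload for text that is already in memory.
inline Settings ParseSettings(const std::string& text)
{
    std::istringstream in(text);
    return ParseSettings(in);
}

// Look up a setting that is expected to exist.
inline const std::string& GetSetting(const Settings& settings, const std::string& key)
{
    const auto it = settings.find(key);
    assert(it != settings.end() && "missing setting");
    return it->second;
}
```

With a line like `CloudTexture: clouds.dds`, the stored value is `" clouds.dds"` with the space included, and a texture file with that name won't be found. Trimming the value would be the friendlier fix; being careful in the data file is the lazy one.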

Now I can control plenty! I may even put in a jump when I get the chance. Basic physics might be something manageable.

For now, skies and clouds can be a variety of values!

Thursday, July 14, 2016

Day 60: The Terrifying Shipping Build

There are some things unexplained by science and human reason...and those things are crashes in the Shipping Build.

Oddly enough, this expanded far enough out to the Development Build. During the spawning of enemies in the project, there was no specific check for whether the class was available (i.e. existed at that point) for spawning, leading to a crash when the spawner was activated. Oddly enough, the editor also claimed some odd ownership over the build even after it was packaged; closing the editor and then running fixed the issue.

The build also doesn't allow a full-screen settings style file like in DefaultGame.ini or DefaultEngine.ini; the system requires I set console commands in the GameModes upon beginning play, or setting the user settings through code. Also weird.

As for not weird things, I made a little more progress on the terrain project; turns out that by loading data once in the constant buffers, I had no need to set their parameters frame-by-frame like in the LightBuffer. Yet another optimization!

Next up? The sky could use some data driving, which may lead to further optimization.

Wednesday, July 13, 2016

Day 59: Constantly Buffer

Today was a day to kickstart more data-driving. I learned some interesting rules about HLSL constant buffer structure, mainly that it is 16-byte aligned by default. Because of that, I couldn't do a struct with one XMFLOAT2 value and four floats without some padding.
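Here's a sketch of the padding problem on the C++ side. `Float2` stands in for `XMFLOAT2` so the snippet compiles without DirectXMath, and the field names are invented; only the sizes matter. One two-float value plus four floats is 24 bytes, which is not a multiple of 16, so two floats of padding round it up to 32:

```cpp
#include <cassert>
#include <cstddef>

// Stand-in for DirectX::XMFLOAT2 (two packed floats, 8 bytes).
struct Float2 { float x, y; };

// 8 + 4 * 4 = 24 bytes: not a multiple of 16.
struct TerrainParamsUnpadded {
    Float2 heightRange;   // hypothetical field names throughout
    float  slopeA;
    float  slopeB;
    float  depthA;
    float  depthB;
};

// Same data with explicit padding so the size lands on a 16-byte boundary.
struct TerrainParams {
    Float2 heightRange;   // bytes  0..7
    float  slopeA;        // bytes  8..11
    float  slopeB;        // bytes 12..15  (first 16-byte register is full)
    float  depthA;        // bytes 16..19
    float  depthB;        // bytes 20..23
    float  padding[2];    // bytes 24..31  (fills out the second register)
};

static_assert(sizeof(TerrainParams) % 16 == 0,
              "constant buffer structs must be a multiple of 16 bytes");

// Round a byte count up to the next multiple of 16.
inline std::size_t PaddedConstantBufferSize(std::size_t bytes)
{
    assert(bytes > 0);
    return (bytes + 15) / 16 * 16;
}
```

The `static_assert` is a cheap way to catch this at compile time instead of at buffer creation.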

However, we can now control some more in depth parts of the terrain, including heights and levels of depth:

As for capstone, I noted an odd thing during the Shipping build: setting a Material Instance Dynamic as a parameter to calculate when the program begins actually doesn't register during the build, resulting in some nasty crashes. Setting the parameter to an editable UMaterial value fixed it up, but it still reminds me how things can work in the editor and Dev builds but not work at all in Shipping.

Tuesday, July 12, 2016

Day 58: Pressing Buttons

Welp, with most of the terrain work being more than complete, I've gotten back to data-driven and input application. Now we can turn things such as outlining, wireframing, and height locking on and off at will and designate slope values for the tiled noise:

As for capstone, the build functions alright; I noticed, however, that the Shipping build crashes with debug text. Very odd observation.

Monday, July 11, 2016

Day 57: The Trees Move With Us

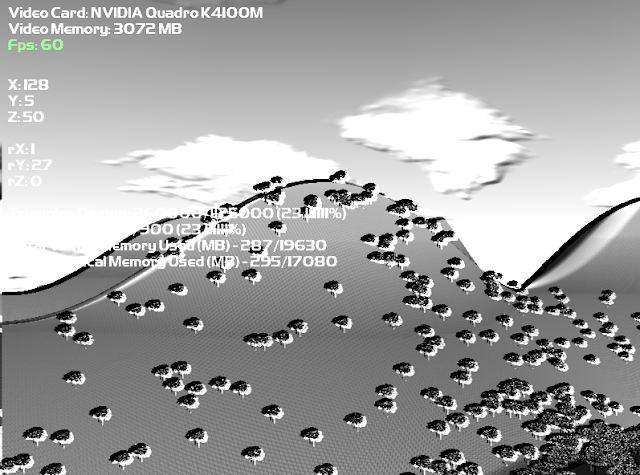

Managed a bit more work, even as the ideas are running thin for the terrain. Turns out the billboards didn't actually move with the terrain as it streamed in, so I figured out a way for the billboards to quickly shift over. Whenever the terrain shifts to another central terrain, I do a quick check on all the billboard instances. If a billboard instance is not on any terrain, it shifts in the direction that the central terrain moved.

I also found that with the slight gap of terrain that is covered up but still shows up when moving quads, I had to add a slight additional tolerance so the trees would still remain in the ground. Otherwise, it would've been expensive height checking and re-adjustment. I am quite thankful for tiled terrain:

As for capstone work, it was mainly composed of refactoring; there's a lot of old classes we aren't using anymore. I'm also doing some investigative work on why metrics are picking up low times for area completion in specific areas. Seems as if there are moments where the data picks up sessions with absolutely no time spent in them, so I'll leave those out of the equation.

Sunday, July 10, 2016

Day 56: Spreading a Little Thin

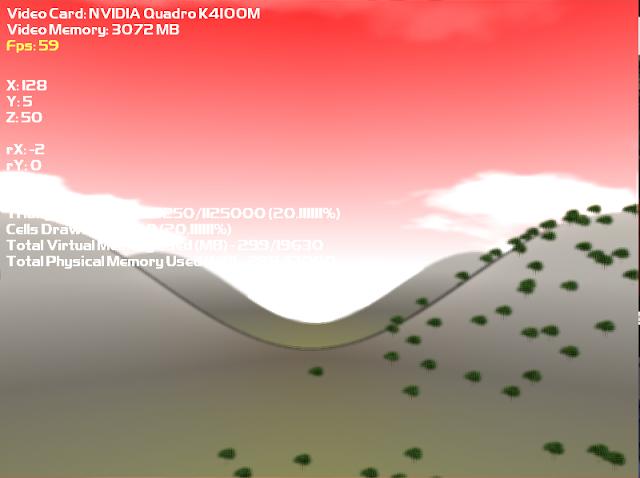

Today was mainly a day to..well...not sure. I'm fairly certain I'm hitting the limits of technical coolness for this terrain here. I managed to do a pretty nice optimization; it seems that by only checking if the player is in a certain terrain (instead of checking a bunch of cells, treating the terrain as a single cell), I could get the frame rate up to snuff with a 3x3 of cells continuously tiling into the horizon:

I also spread out the clusters of billboards so they'd cover the whole 3x3 set instead, but nothing yet on them following the player.

As for capstone, I did manage to do some bits and pieces for fixes. I feel like I'm doing plenty of design (or correcting design) work instead of programming, but somebody's gotta do 'em.

Saturday, July 9, 2016

Day 55: Feel the Infinite

For capstone, today was mainly just a set of content fixes. It's almost terrifying to look away for a few days and come back to a multitude of lighting, build, and import errors. All fixed up, though!

The exciting part was the terrain; by hooking up a temporary vector swap and height check, I can effectively "load" in pieces of terrain by consistently centralizing the surrounding terrain over a current central terrain that the player is on:

Frame rate's a bit chuggy with all 9 terrain pieces, but it goes on infinitely! Now I just gotta figure out a good way to optimize all this...
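For reference, here's a simplified sketch of the recentring idea, assuming square tiles laid out on a grid. It only works out which tile the player is standing on and which nine tiles should surround them; the actual vector swap and height check are left out, and `TileCoord`, `TileContaining`, and `SurroundingTiles` are made-up names:

```cpp
#include <array>
#include <cassert>
#include <cmath>

// Integer grid coordinate of a terrain tile (hypothetical type).
struct TileCoord { int x, z; };

// Which tile contains a world-space position, for square tiles of `tileSize`.
inline TileCoord TileContaining(float worldX, float worldZ, float tileSize)
{
    assert(tileSize > 0.0f);
    return { static_cast<int>(std::floor(worldX / tileSize)),
             static_cast<int>(std::floor(worldZ / tileSize)) };
}

// The 3x3 block of tiles centred on `center`, row by row.
// Index 4 is the central tile itself.
inline std::array<TileCoord, 9> SurroundingTiles(TileCoord center)
{
    std::array<TileCoord, 9> tiles{};
    for (int dz = -1; dz <= 1; ++dz) {
        for (int dx = -1; dx <= 1; ++dx) {
            tiles[(dz + 1) * 3 + (dx + 1)] = { center.x + dx, center.z + dz };
        }
    }
    return tiles;
}
```

Whenever `TileContaining` returns a different tile than last frame, the nine terrain pieces get reassigned to the new `SurroundingTiles` result, which is what makes the world feel endless.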

Friday, July 8, 2016

Day 54: The Answer

Woo! It looks like the issue with the terrain was due to the cells cutting off the last parts of the vertices of the model data. Problem is, even the terrain calculations cut off the last bit. What to do? Even with the exact coordinates, the data always misses one quad of terrain.

However! What if the original sampling of positions goes one quad over, using the first quad as a terrain wrap?

Now we can render gigantic, if not infinite sections!

Well, the idea is to take a section of terrain and place it in front of the other section for a so-called infiniscape. Let's see how that goes!

Thursday, July 7, 2016

Day 53: The Problem

Blargh, I'm so hungry. I've been trying to crack this here nut, but the bug's deeper than I thought.

When I was using regular noise with turbulence, I didn't notice that the cells weren't actually covering the entire terrain. Gasp! Where are these extra bits leaving off?

Seems as if my cell calculation process actually doesn't handle the last parts at the end, meaning that I must figure out how to get the vertices exactly on spot to cover the entire mesh.

There's no way to tile terrain at this rate!

Wednesday, July 6, 2016

Day 52: The Fourth Dimension

Whoooo! Turns out that I needed to completely rethink (i.e. redo) the noise that I did; turns out that only a special type of noise can be tiled in order to create what I seek.

4D Simplex Noise! Turns out that Perlin noise has a much higher computational complexity (2^n?!?!) at higher dimensions compared to Simplex (n^2). It works by skewing the coordinates (4 of them!), finding the specific simplex (a sequence of mutually orthogonal edges), and hashing in a pseudo-random gradient direction.

Did that sound ridiculously complex? Yes, yes it was.

But now we have noise that can be tiled (using the power of two circles orthogonal in 4D space):

Man, don't that just look simple. It tiles, so that's important; I'll be working on seeing if I can use turbulence or something to get it looking more natural.
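The two-circles trick is easier to see in code. This sketch only shows the coordinate mapping: each 2D axis is wrapped around its own circle, and the two circles sit in separate planes of 4D space. The resulting point would then be fed into a 4D simplex noise function (not shown here). `Float4`, `TileableNoiseCoord`, and the `radius` parameter are names I chose for illustration:

```cpp
#include <cassert>
#include <cmath>

// Hypothetical stand-in for a point in 4D noise space.
struct Float4 { float x, y, z, w; };

// Map tile-space (u, v) in [0, 1] onto two circles in 4D:
// u walks a circle in the x-y plane, v walks a circle in the z-w plane.
// Because u = 0 and u = 1 land on the same point (likewise for v),
// noise sampled at these coordinates wraps seamlessly.
inline Float4 TileableNoiseCoord(float u, float v, float radius)
{
    assert(radius > 0.0f);
    const float twoPi = 6.28318530718f;
    return { radius * std::cos(twoPi * u),
             radius * std::sin(twoPi * u),
             radius * std::cos(twoPi * v),
             radius * std::sin(twoPi * v) };
}
```

A bigger `radius` means a longer walk through the noise field per tile, so more features show up before the pattern repeats.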

As for capstone, we've got more pretty things integrated in! The podiums needed a bit of work, including a set of pieces that get moved by gravity in the podium without actually falling to the ground and bridges spawning in the correct place, but it all ended up alright! We also have a new platform that required fortunately little material work.

Let's get that simplex noise less simple!

Tuesday, July 5, 2016

Day 51: Expansion Pack

Time for some more data-driven evidence! Now we can easily switch out algorithms and values on the fly, allowing no compilation to go from this:

To this:

What's the real aim today, though? Making more than one terrain. If you thought this terrain took several weeks to manage, this one'll be a doozy. I'm making it so that multiple terrain objects can be created as we speak. Right now the terrain will share one neighbor, but if I can blend both to look natural then we have an advantage:

Note - the displaced terrain can only be shown one at a time. Working on getting both versions!
